Can Cui (崔璨)

Research Scientist in VLA

Bosch Center for Artificial Intelligence (BCAI)


Ph.D. Purdue University

Email: cancui19 [at] gmail [dot] com

Office: 384 Santa Trinita Ave, Sunnyvale, CA 94085

I am a Research Scientist in Vision-Language Action (VLA) Models at the Bosch Center for Artificial Intelligence (BCAI), developing foundation models for personalized, interpretable, and safe autonomous driving. My prior industry experience includes serving as an AI Research Intern at Toyota InfoTech Labs and as a Controls Research Intern at Cummins Inc., developing control algorithms and digital twin validation for physical systems. I earned my Ph.D. from Purdue University, advised by Dr. Ziran Wang, where my research focused on human–autonomy teaming, multimodal perception, and digital twin–based validation for autonomous vehicles. My work spans LLMs/VLMs, VLA, human-autonomy teaming, generative motion planning, control, and data-driven autonomy.

News

| Jan 5, 2026 | I started working at the Bosch Center for Artificial Intelligence (BCAI) as a Research Scientist in Vision-Language Action (VLA) Models! 🎉 |
|---|---|
| Dec 1, 2025 | I successfully defended my Ph.D. dissertation on “Foundation Models for Human-Autonomy Teaming in Autonomous Vehicles”! 🎉 |
| Oct 8, 2025 | I will serve as the Guest Editor of the JCAV Focus Issue on Large Language and Vision Models for Connected and Automated Vehicles! 🎉 |
| Sep 10, 2025 | One paper was accepted to EMNLP 2025! 🎉 |
| Jan 17, 2025 | I will serve as the General Chair of the CVPR 2025 Workshop on Distillation of Foundation Models for Autonomous Driving. See you in Nashville! 🎉 |

Selected Publications

* indicates equal contributions. Please check my Google Scholar for the complete list of publications.

-

P-IEEE

LLM4AD: Large Language Models for Autonomous Driving – Concept, Review, Benchmark, Experiments, and Future TrendsIn Proceedings of the IEEE (P-IEEE) , 2025

P-IEEE

LLM4AD: Large Language Models for Autonomous Driving – Concept, Review, Benchmark, Experiments, and Future TrendsIn Proceedings of the IEEE (P-IEEE) , 2025 -

EMNLP

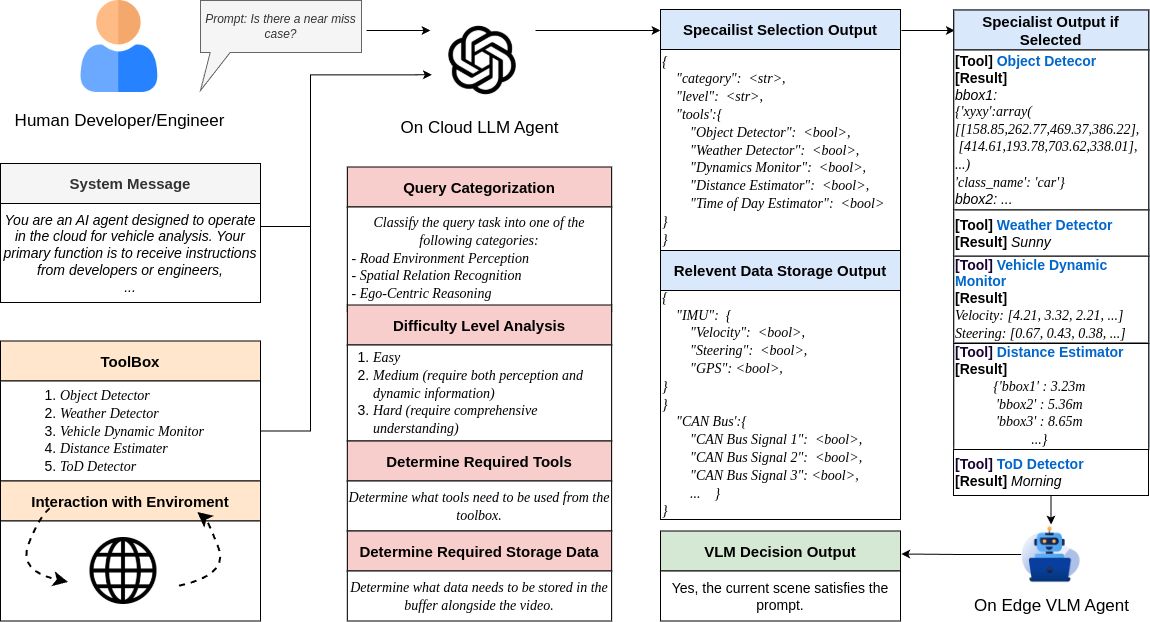

DASR: Distributed Adaptive Scene Recognition-A Multi-Agent Cloud-Edge Framework for Language-Guided Scene DetectionIn Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing: Industry Track (EMNLP) , 2025

EMNLP

DASR: Distributed Adaptive Scene Recognition-A Multi-Agent Cloud-Edge Framework for Language-Guided Scene DetectionIn Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing: Industry Track (EMNLP) , 2025 -

ITSC

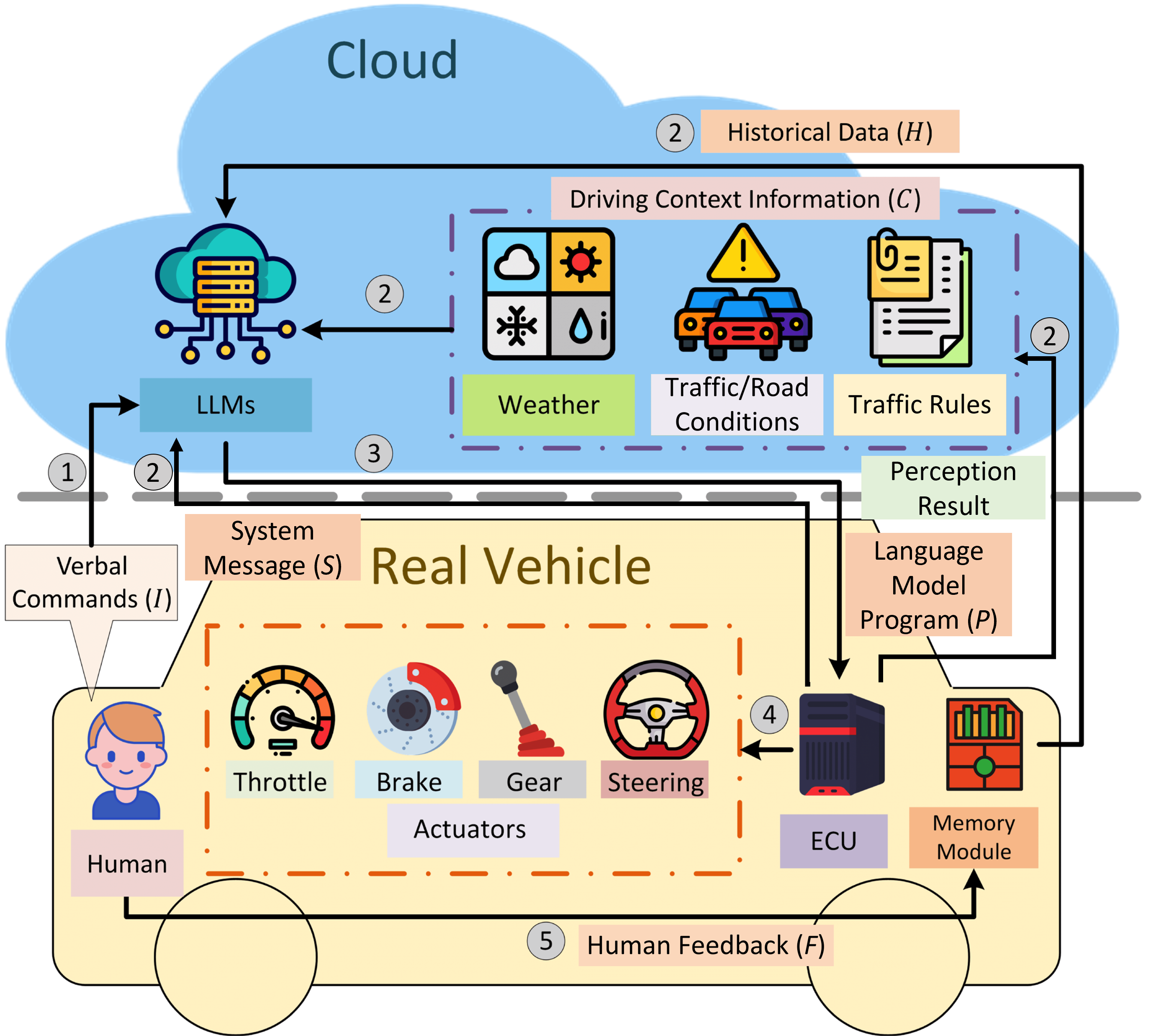

Personalized Autonomous Driving with Large Language Models: Field ExperimentsIn 2024 IEEE 27th International Conference on Intelligent Transportation Systems (ITSC) (ITSC) , 2024

ITSC

Personalized Autonomous Driving with Large Language Models: Field ExperimentsIn 2024 IEEE 27th International Conference on Intelligent Transportation Systems (ITSC) (ITSC) , 2024 -

CVPR

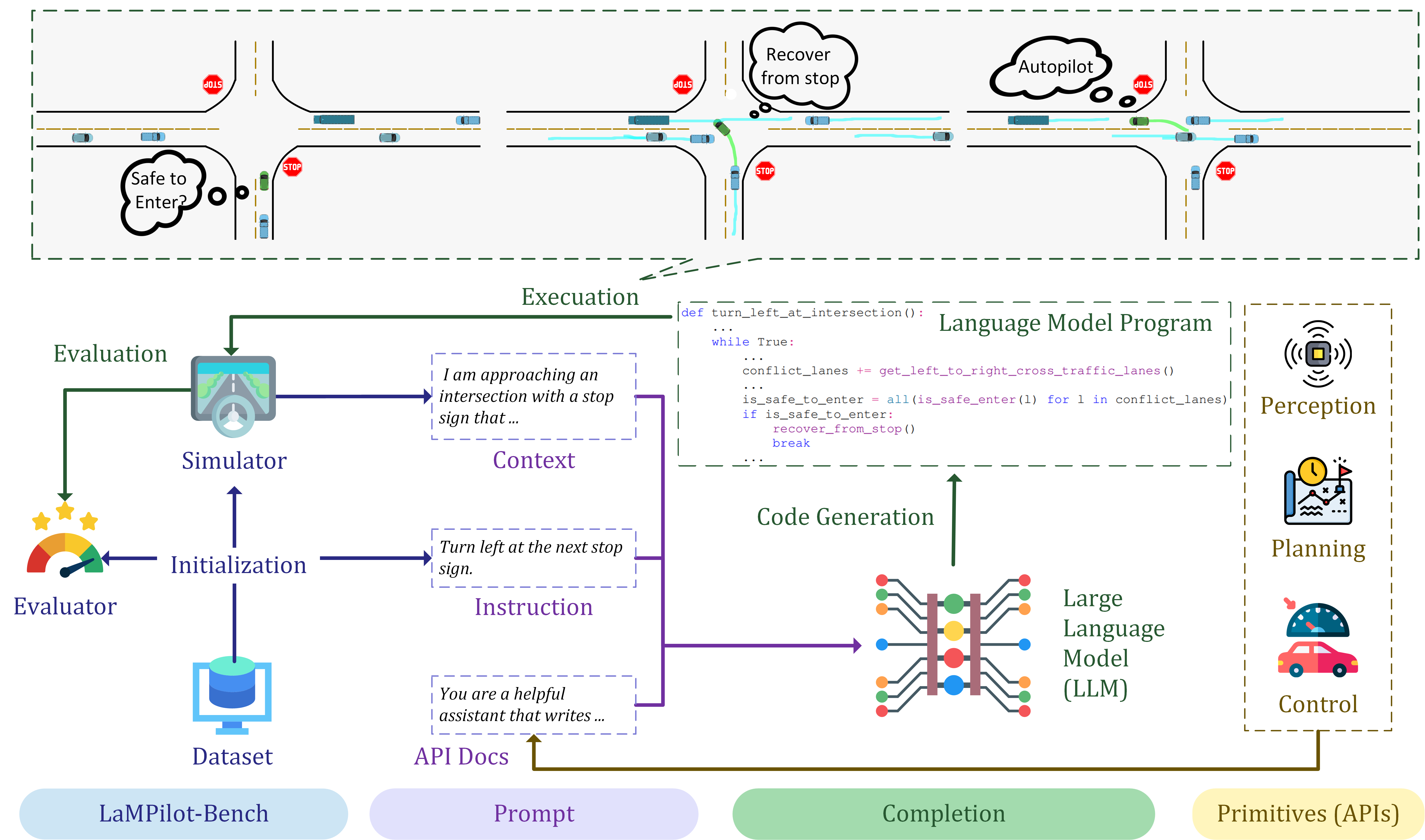

LaMPilot: An Open Benchmark Dataset for Autonomous Driving with Language Model ProgramsIn Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , 2024

CVPR

LaMPilot: An Open Benchmark Dataset for Autonomous Driving with Language Model ProgramsIn Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , 2024

T-IV
