Publications

2025

P-IEEE

LLM4AD: Large Language Models for Autonomous Driving – Concept, Review, Benchmark, Experiments, and Future Trends. In Proceedings of the IEEE (P-IEEE), 2025

EMNLP

DASR: Distributed Adaptive Scene Recognition-A Multi-Agent Cloud-Edge Framework for Language-Guided Scene Detection. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing: Industry Track (EMNLP), 2025

2024

ITSC

Personalized Autonomous Driving with Large Language Models: Field Experiments. In 2024 IEEE 27th International Conference on Intelligent Transportation Systems (ITSC), 2024

CVPR

LaMPilot: An Open Benchmark Dataset for Autonomous Driving with Language Model Programs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

ITSM

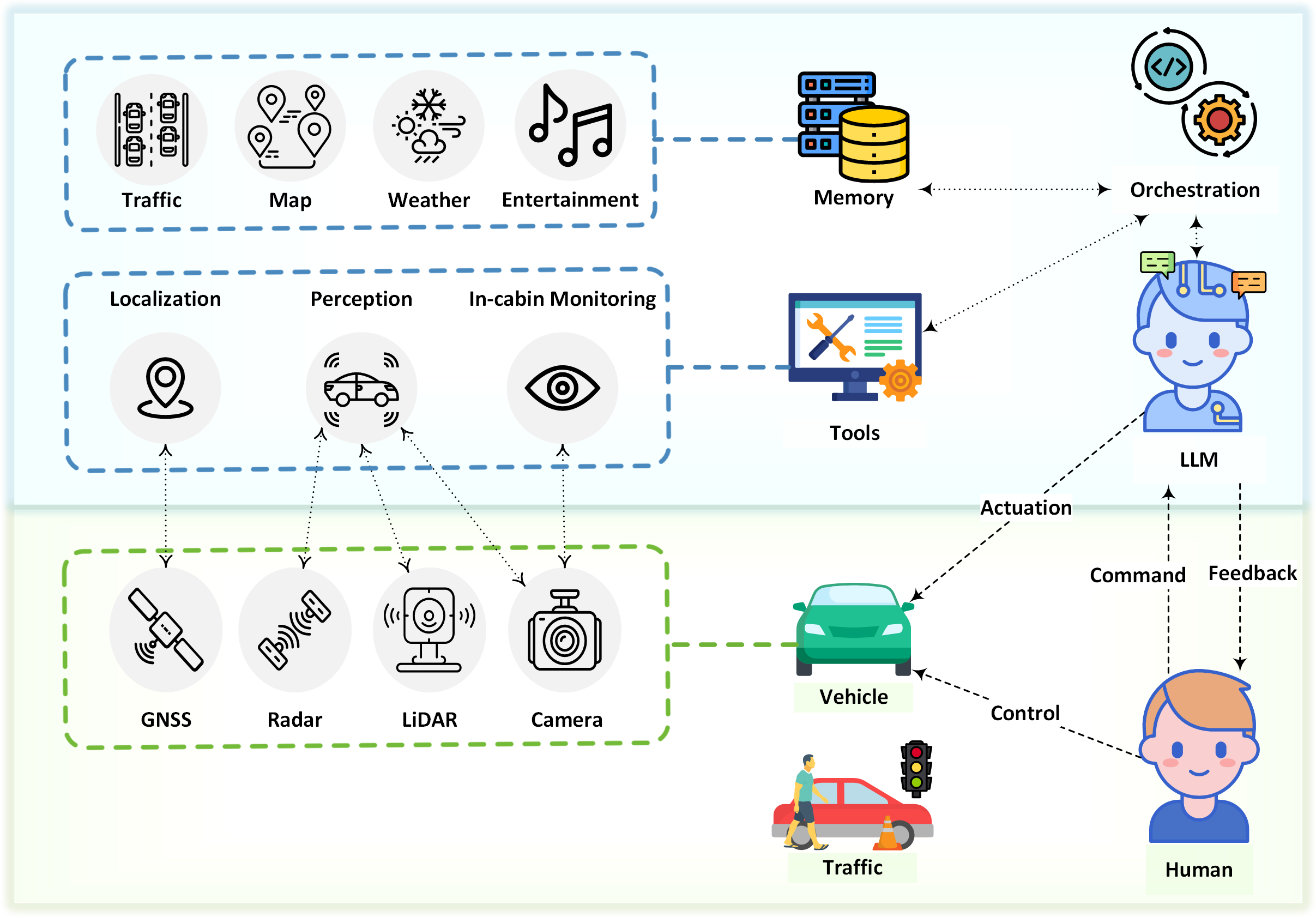

Receive, Reason, and React: Drive as You Say with Large Language Models in Autonomous Vehicles. In IEEE Intelligent Transportation Systems Magazine (ITSM), 2024

WACV

Drive as You Speak: Enabling Human-Like Interaction with Large Language Models in Autonomous Vehicles. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2024

2023

WACV

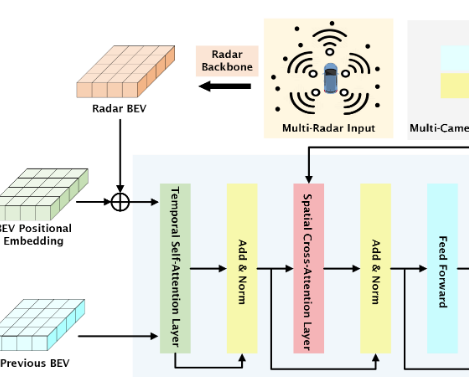

MACP: Efficient Model Adaptation for Cooperative Perception. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2023

SEC

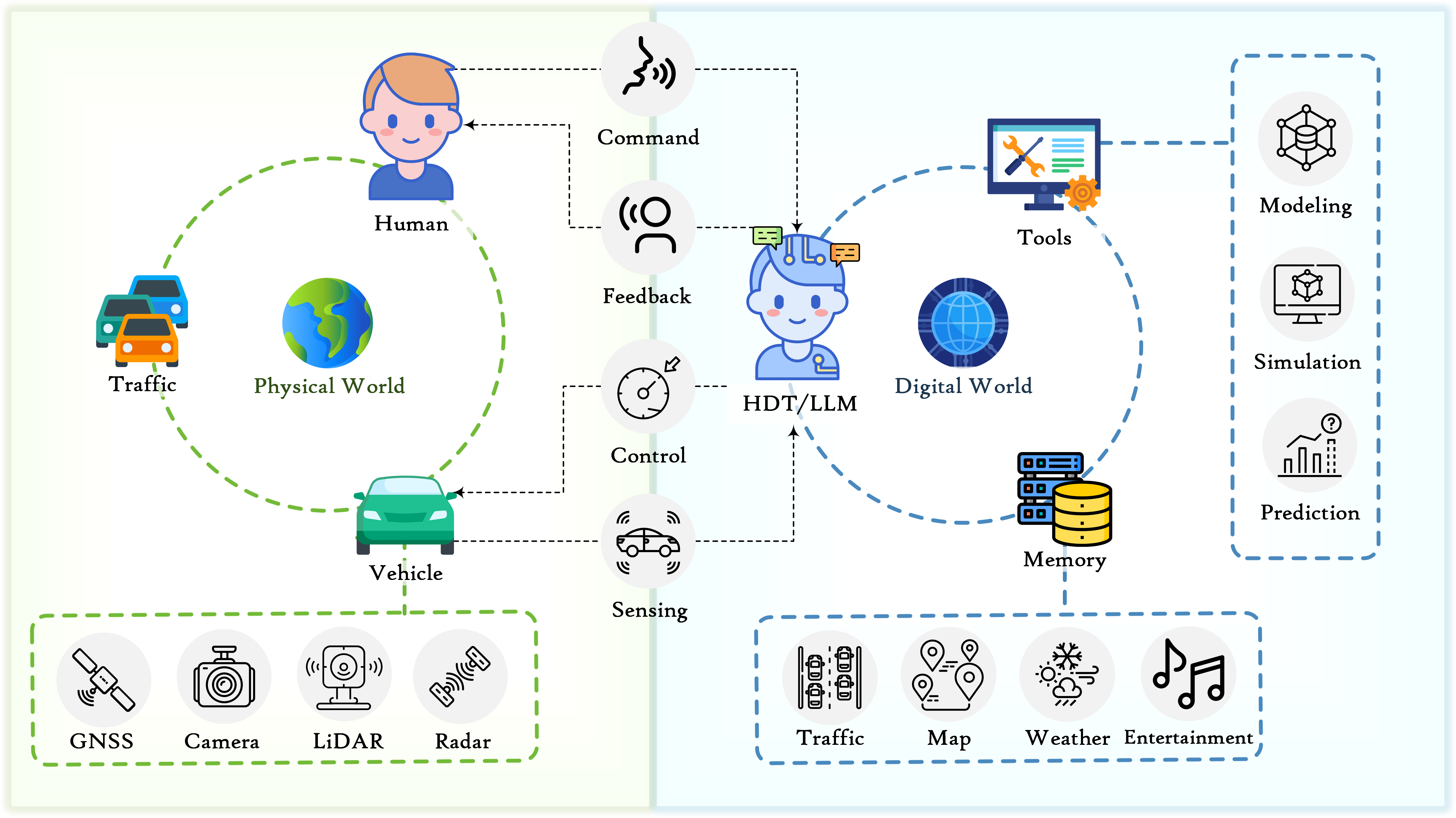

Human-Autonomy Teaming on Autonomous Vehicles with Large Language Model-Enabled Human Digital Twins. In Proceedings of the ACM/IEEE Symposium on Edge Computing (SEC), 2023

ITSC

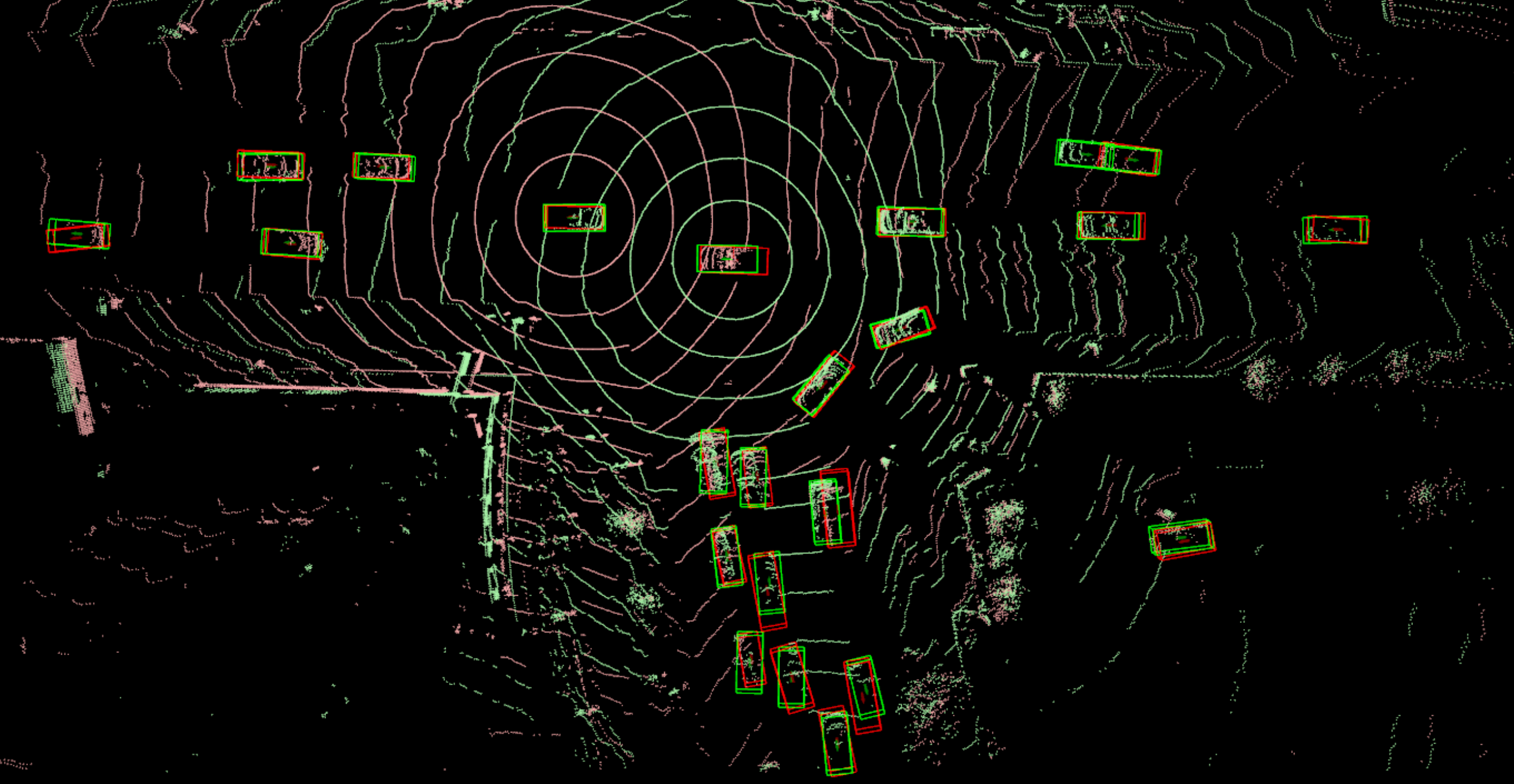

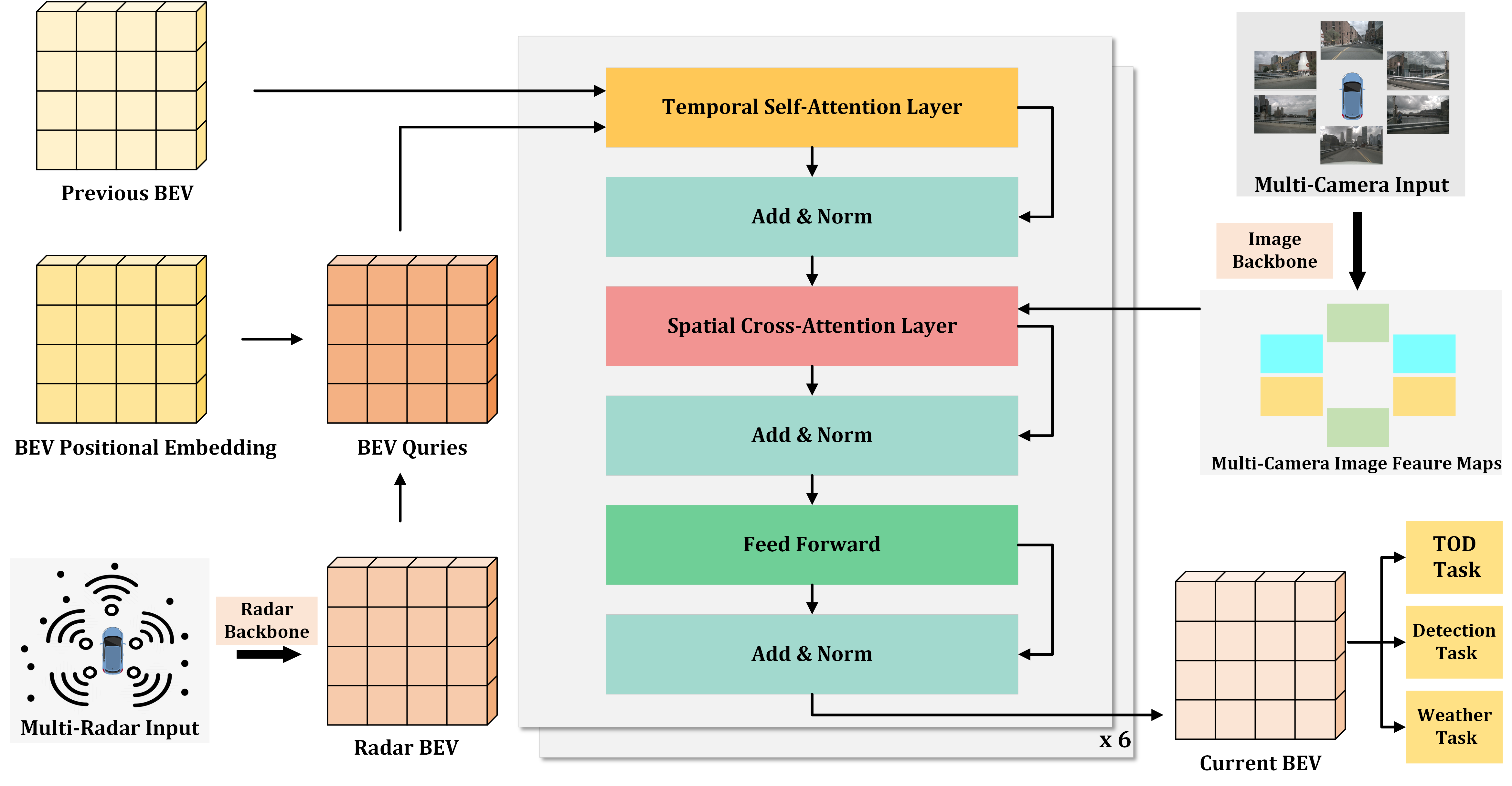

Radar Enlighten the Dark: Enhancing Low-Visibility Perception for Automated Vehicles with Camera-Radar Fusion. In IEEE International Conference on Intelligent Transportation Systems (ITSC), 2023

T-IV

T-IV